Tactile Learning

Dexterous Manipulation with Tactile Information

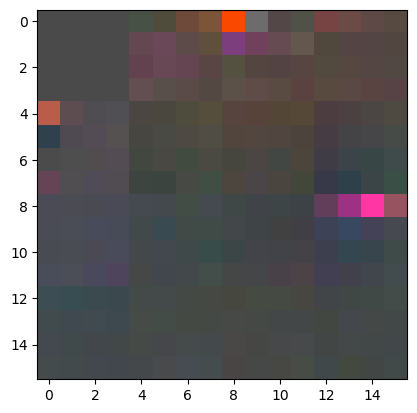

This is a dexterous manipulation project that relies mainly on tactile sensors. Our setup consists of a Kinova robotic arm and an Allegro hand integrated with Xela touch sensors. With this setup, we use VR headsets to control both the Kinova arm and the Allegro hand to collect demonstrations. We then use self-supervised learning algorithms to learn a good representation of the environment. These representations help us complete tasks with non-parametric imitation learning algorithms.
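One common form of non-parametric imitation is a nearest-neighbour lookup over demonstration frames. The sketch below is a minimal illustration, not this project's implementation. It assumes that each demonstration frame has already been encoded into an embedding vector by the learned encoder and paired with the robot action recorded at that frame. The names `knn_action` and `euclidean`, the Euclidean distance, and averaging over `k` neighbours are all illustrative assumptions.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_action(
    query: Sequence[float],
    demo_embeddings: List[Sequence[float]],
    demo_actions: List[Sequence[float]],
    k: int = 3,
) -> List[float]:
    """Hypothetical non-parametric policy: return the mean action of the k
    demonstration frames whose embeddings are closest to the query.
    Nothing is trained at deployment time; the policy is a lookup."""
    if not demo_embeddings or len(demo_embeddings) != len(demo_actions):
        raise ValueError("need one action per demonstration embedding")
    k = min(k, len(demo_embeddings))
    # Rank demonstration frames by distance to the current observation embedding.
    order = sorted(
        range(len(demo_embeddings)),
        key=lambda i: euclidean(query, demo_embeddings[i]),
    )
    nearest = order[:k]
    dim = len(demo_actions[0])
    # Average the actions of the k nearest frames, component by component.
    return [sum(demo_actions[i][d] for i in nearest) / k for d in range(dim)]


if __name__ == "__main__":
    # Toy data: 2-D embeddings and 2-D actions (assumed, for illustration only).
    embeddings = [[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]]
    actions = [[0.1, 0.2], [0.3, 0.4], [9.0, 9.0]]
    print(knn_action([0.2, 0.1], embeddings, actions, k=2))
```

Because the policy only stores and retrieves demonstration frames, its behaviour depends almost entirely on how well the learned representation places similar situations close together. This dependence is why representation learning carries most of the weight in this kind of pipeline.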

Engineering for the Kinova arm and Allegro hand is done using the Holo-Dex framework, into which the Xela sensors are integrated. Although this code is not yet public, it will be released soon. An example demonstration with this setup can be found here.

The GitHub repository for this project's learning framework can be found at tactile-learning.

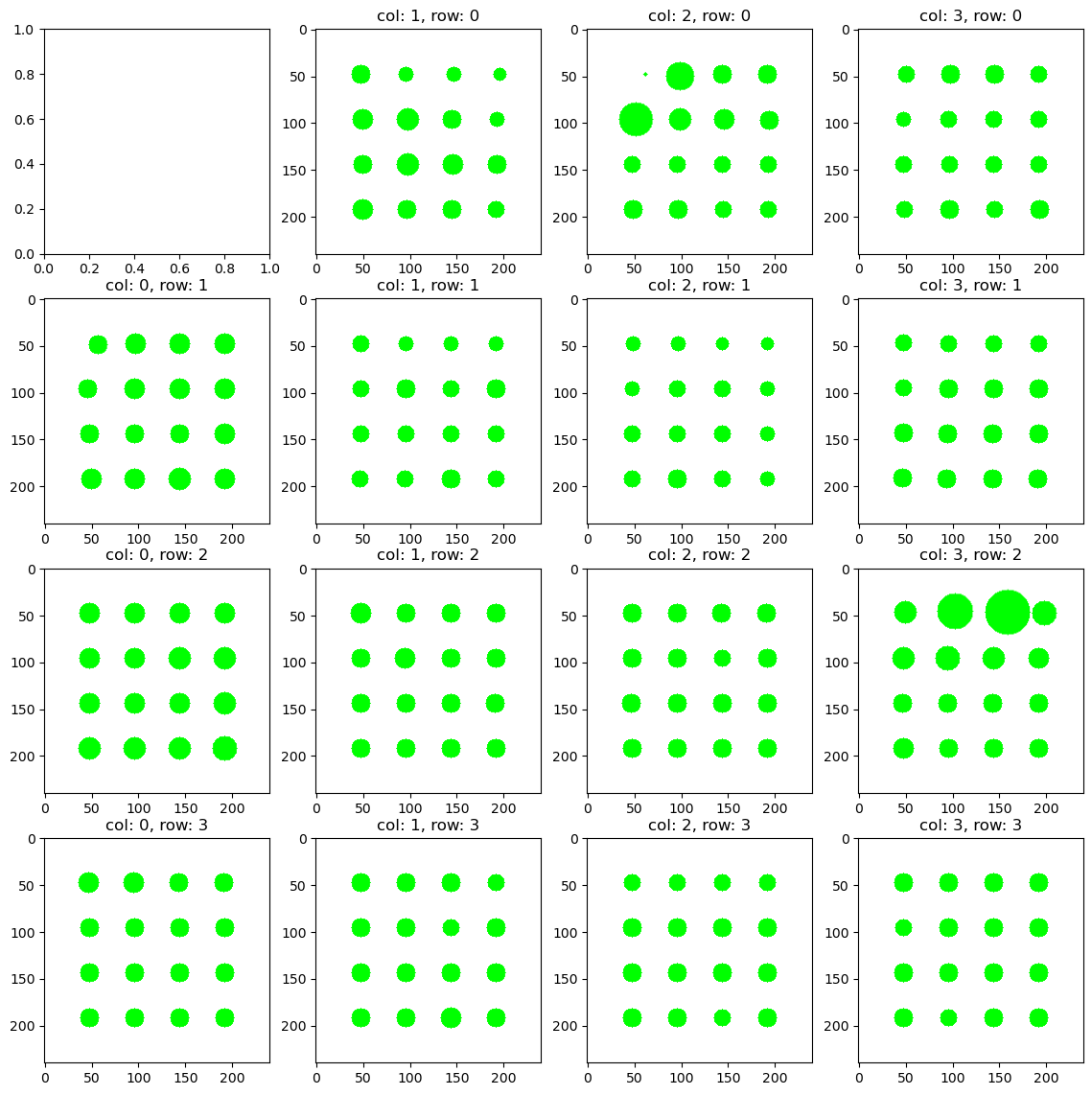

Here, tactile information is converted into tactile images, and representations are then learned from these images using self-supervised learning algorithms.
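The conversion from raw tactile readings to an image can be sketched as follows. This is a minimal illustration under explicit assumptions:

- Each Xela sensor pad reports a 4x4 grid of taxels, with a three-axis (x, y, z) reading per taxel.
- Pads are tiled left to right into a grid.
- The three axes are mapped to the three color channels after per-axis min-max normalization.

The names `pads_to_image`, `normalize`, and `PAD_SIZE` are hypothetical, and the actual project may lay out pads and scale values differently.

```python
from typing import List

Taxel = List[float]               # [x, y, z] reading of one taxel
Pad = List[List[Taxel]]           # PAD_SIZE x PAD_SIZE taxels (assumed layout)
Image = List[List[List[float]]]   # height x width x 3 channels

PAD_SIZE = 4  # assumption: each pad is a 4x4 grid of taxels


def normalize(values: List[float]) -> List[float]:
    """Min-max scale values to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def pads_to_image(pads: List[Pad], pads_per_row: int) -> Image:
    """Hypothetical layout: tile pads into one image, with the x, y and z
    readings of each taxel stored as the R, G and B channels of a pixel."""
    if not pads or pads_per_row < 1:
        raise ValueError("need at least one pad and a positive pads_per_row")
    for pad in pads:
        if len(pad) != PAD_SIZE or any(len(row) != PAD_SIZE for row in pad):
            raise ValueError("every pad must be PAD_SIZE x PAD_SIZE taxels")
    n_rows = -(-len(pads) // pads_per_row)  # ceiling division
    height, width = n_rows * PAD_SIZE, pads_per_row * PAD_SIZE
    # Grid cells with no pad stay black (all channels 0.0).
    image = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for axis in range(3):
        # Normalize each axis across all taxels of all pads, in pad/row/col order.
        flat = [taxel[axis] for pad in pads for row in pad for taxel in row]
        scaled = iter(normalize(flat))
        for p in range(len(pads)):
            top = (p // pads_per_row) * PAD_SIZE
            left = (p % pads_per_row) * PAD_SIZE
            # Same pad/row/col order as `flat`, so values land on their own taxel.
            for r in range(PAD_SIZE):
                for c in range(PAD_SIZE):
                    image[top + r][left + c][axis] = next(scaled)
    return image
```

Keeping each pad as a contiguous block of pixels preserves which taxels are physically adjacent. That spatial structure is what allows ordinary image encoders, and the self-supervised methods built on them, to be reused for touch.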